Motivation

The following sections discuss the limitations of Lima1 and the goals for Lima2.

Analysis and goals

Distributed DAQ and processing

Analysis

High-performance detectors generate more data than a single backend computer can handle, both for data acquisition and for processing. Because Lima1 is a monolithic (single-process) solution, it does not scale well.

Goal

Support high-performance detectors with a horizontally scalable solution. A distributed system, e.g. a cluster of acquisition and processing computers, is considered.

Most high-performance detectors are built by aggregating multiple modules, so two frame dispatching strategies are worth considering:

Full frame: each acquisition/processing node receives a full detector frame (including all modules)

Partial frame: each acquisition/processing node receives data from a single module
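The two strategies can be contrasted with a minimal sketch of a dispatch plan. It assumes round-robin assignment of whole frames in full-frame mode and one node per module in partial-frame mode; both policies and the function names are hypothetical illustrations, not the Lima2 dispatcher.

```python
from typing import Dict, List, Tuple


def dispatch_full_frames(nb_frames: int, nb_nodes: int) -> Dict[int, List[int]]:
    """Full frame: each frame (all modules) goes to a single node.

    Round-robin is only one possible policy (assumption)."""
    plan: Dict[int, List[int]] = {node: [] for node in range(nb_nodes)}
    for frame_idx in range(nb_frames):
        plan[frame_idx % nb_nodes].append(frame_idx)
    return plan


def dispatch_partial_frames(nb_frames: int, nb_modules: int) -> Dict[int, List[Tuple[int, int]]]:
    """Partial frame: node m receives module m of every frame.

    Returns (frame_idx, module_idx) pairs per node (one node per module assumed)."""
    return {
        module: [(frame_idx, module) for frame_idx in range(nb_frames)]
        for module in range(nb_modules)
    }
```

With full frames, each node sees complete images, so any processing works unchanged but every module's data must reach the same node. With partial frames, no assembly is needed on the way in, but processing that needs the whole image typically has to gather data from several nodes.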

The distributed system should be flexible enough to support a variety of acquisition and processing node topologies, such as:

A single acquisition computer and multiple processing computers

Multiple computers that each perform both acquisition and processing

Multiple acquisition computers and multiple processing computers

The trivial case of a single computer for acquisition and processing should also be supported.

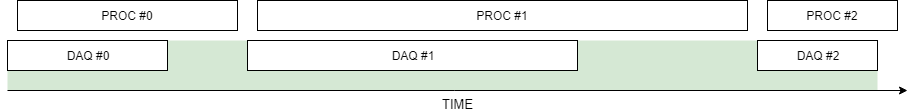

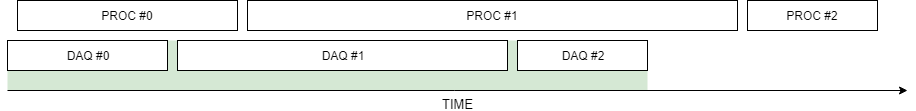

Decoupled DAQ and processing

Analysis

With Lima1, acquisition and processing are so tightly coupled that user applications must wait until all the frames of the current acquisition have been processed before starting a new acquisition.

Goal

Allow a new acquisition to start as soon as the camera is ready, without waiting for all the images to go through the full processing pipeline. A batch of acquisitions could even be started and their processing deferred to a later time, e.g. once all the data are in memory.
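A minimal sketch of the decoupling, assuming a FIFO queue between acquisition and processing: `start_acquisition` only enqueues frames and returns, while a separate worker thread drains the queue. The class and method names are hypothetical and the "processing" is a placeholder.

```python
import queue
import threading
from typing import List, Tuple


class DecoupledDaq:
    """Acquisition only enqueues frames; a worker thread processes them,
    so a new acquisition can start before the previous one is processed."""

    def __init__(self) -> None:
        self._frames: queue.Queue = queue.Queue()
        self.processed: List[Tuple[int, int]] = []
        self._worker = threading.Thread(target=self._process_loop, daemon=True)
        self._worker.start()

    def start_acquisition(self, acq_id: int, nb_frames: int) -> None:
        # Returns as soon as the (simulated) camera has delivered its frames;
        # it does not wait for processing to finish.
        for frame_idx in range(nb_frames):
            self._frames.put((acq_id, frame_idx))

    def _process_loop(self) -> None:
        while True:
            item = self._frames.get()
            if item is None:  # sentinel: shut down the worker
                break
            self.processed.append(item)  # placeholder for the real pipeline

    def close(self) -> None:
        """Block until every queued frame has been processed."""
        self._frames.put(None)
        self._worker.join()
```

Two acquisitions can be started back to back (`start_acquisition(1, 3)` then `start_acquisition(2, 2)`) and their frames are processed later, in order. The unbounded queue is a simplification; in practice its depth would be limited by the available memory.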

Coordinate transformation tracking

Analysis

With Lima1, the coordinate transformations induced by the processing tasks are not formalized, so it can be tricky to map back to the detector coordinate system when viewing an image that has been flipped, rotated and cropped, for instance to set a ROI.

Goal

Each processing task provides a matrix representing its affine transformation, and these transformations can be composed to compute the coordinates of pixels and ROIs in the detector coordinate system.
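A sketch of the idea with 3x3 homogeneous matrices in pure Python. It assumes pixel coordinates `(x, y)` with the origin at the top-left corner, a horizontal flip, a 90° clockwise rotation and a crop; the helper names are hypothetical. Matrices are composed right to left in processing order, and the inverse of the product maps a displayed pixel back to the detector.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """3x3 matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def flip_horizontal(width: int) -> Matrix:
    # x' = width - 1 - x, y' = y
    return [[-1, 0, width - 1], [0, 1, 0], [0, 0, 1]]


def rotate_90_cw(height: int) -> Matrix:
    # For an input image of the given height: x' = height - 1 - y, y' = x
    return [[0, -1, height - 1], [1, 0, 0], [0, 0, 1]]


def crop(x0: int, y0: int) -> Matrix:
    # x' = x - x0, y' = y - y0
    return [[1, 0, -x0], [0, 1, -y0], [0, 0, 1]]


def invert_affine(m: Matrix) -> Matrix:
    """Inverse of [[A, t], [0, 1]] is [[A^-1, -A^-1 t], [0, 1]]."""
    (a, b, tx), (c, d, ty) = m[0], m[1]
    det = a * d - b * c
    ia, ib, ic, id_ = d / det, -b / det, -c / det, a / det
    return [[ia, ib, -(ia * tx + ib * ty)],
            [ic, id_, -(ic * tx + id_ * ty)],
            [0, 0, 1]]


def apply(m: Matrix, x: float, y: float) -> Tuple[float, float]:
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])
```

For a 4x3 detector image that is flipped, rotated, then cropped at `(1, 1)`, the pipeline matrix is `matmul(crop(1, 1), matmul(rotate_90_cw(3), flip_horizontal(4)))`. Detector pixel `(1, 0)` ends up at `(1, 1)` in the displayed image, and applying `invert_affine` to that matrix brings `(1, 1)` back to `(1, 0)`. A ROI can be mapped the same way, corner by corner.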

Memory management

Analysis

In Lima1, there is no global memory management, in particular for data generated in the processing pipeline (e.g. compressed images before saving). There is only one buffer allocation engine, the hardware buffers. This is inefficient because a lot of memory must be systematically allocated to absorb the latencies of the processing tasks. The “in-place” task functionality is not efficient when the resulting image is smaller than the original one. Hardware buffers may be “expensive” and/or “limited”, so they should only serve the first processing stage. Finally, “asynchronous” data retrieval is not systematically used, so the buffers implementing this functionality should be optional (see “Multiple observation / testing tasks” below).

Goal

Buffer sizes at different stages should be dynamically adjusted. The use of allocators should be formalized and generalized.

It would be desirable to formalize the management of different kinds of memory, such as GPU and FPGA memory. Page locking, NUMA awareness (CPU affinity) and thread-local storage (TLS) might also be useful in high-performance applications.
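One way to picture per-stage, dynamically sized buffers is a small pool that grows on demand up to a limit and shrinks back when idle. This is a sketch under assumptions: `BufferPool`, its parameters and the "raise when exhausted" behavior are hypothetical, and plain `bytearray`s stand in for whatever memory kind (host, pinned, GPU) a real allocator would manage.

```python
from typing import List


class BufferPool:
    """Hypothetical per-stage pool of fixed-size buffers.

    Grows on demand up to max_buffers and can shrink back to min_buffers,
    so each stage sizes its memory independently of the hardware buffers."""

    def __init__(self, buffer_size: int, min_buffers: int, max_buffers: int) -> None:
        self.buffer_size = buffer_size
        self.min_buffers = min_buffers
        self.max_buffers = max_buffers
        self._free: List[bytearray] = [bytearray(buffer_size) for _ in range(min_buffers)]
        self._allocated = min_buffers  # buffers currently owned (free + in use)

    def acquire(self) -> bytearray:
        if self._free:
            return self._free.pop()
        if self._allocated < self.max_buffers:
            self._allocated += 1
            return bytearray(self.buffer_size)  # grow on demand
        # The caller decides what to do: wait, drop the frame, abort...
        raise MemoryError("buffer pool exhausted")

    def release(self, buf: bytearray) -> None:
        self._free.append(buf)

    def shrink(self) -> None:
        """Free idle buffers, keeping at least min_buffers allocated."""
        while self._free and self._allocated > self.min_buffers:
            self._free.pop()
            self._allocated -= 1

    @property
    def allocated(self) -> int:
        return self._allocated
```

A compression stage, for example, could own a pool of small buffers while the first stage keeps the hardware buffers, instead of every stage working in place on full-size hardware buffers.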

Multiband image support

Analysis

With Lima1, only grayscale images are fully supported. Color images can be acquired with the so-called Video mode.

Goal

Color (video) cameras and cameras with multiple energy discriminating thresholds (e.g. Dectris EIGER2) should be supported.
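A sketch of a frame type that covers both cases, assuming a planar layout (one 2D plane per band): grayscale is one channel, RGB three, and a detector with two energy thresholds two. The `Frame` class and its methods are hypothetical, not the Lima2 data structure.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Frame:
    """Hypothetical multiband frame, planar layout: data[channel][y][x] flattened."""
    width: int
    height: int
    nb_channels: int = 1
    data: List[int] = field(default_factory=list)

    def __post_init__(self) -> None:
        size = self.nb_channels * self.height * self.width
        if not self.data:
            self.data = [0] * size
        if len(self.data) != size:
            raise ValueError("data size does not match the frame geometry")

    def _index(self, channel: int, x: int, y: int) -> int:
        return (channel * self.height + y) * self.width + x

    def get(self, channel: int, x: int, y: int) -> int:
        return self.data[self._index(channel, x, y)]

    def set(self, channel: int, x: int, y: int, value: int) -> None:
        self.data[self._index(channel, x, y)] = value

    def plane(self, channel: int) -> List[int]:
        """Return one band as a flat, row-major grayscale image."""
        size = self.width * self.height
        return self.data[channel * size:(channel + 1) * size]
```

A planar layout lets existing grayscale processing run unchanged on each band through `plane()`; an interleaved layout (e.g. RGB video) would be the alternative, at the cost of strided access per band.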

Resource monitoring

Analysis

With Lima1, there is no “global” resource (memory or CPU) monitoring.

Goal

Keep track of buffer allocations and report them to the application (control system). For example, this could help prevent buffer overruns if the scan can be paused.
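A sketch of such tracking, assuming that stages report their allocations to a central tracker and that the control system polls it. `AllocationTracker`, the high/low water marks and the `paused` flag are hypothetical; the hysteresis keeps the scan from toggling between pause and resume on every frame.

```python
from collections import defaultdict
from typing import Dict


class AllocationTracker:
    """Hypothetical allocation tracker with per-stage accounting.

    Sets `paused` when usage reaches the high-water mark and clears it
    once usage falls back to the low-water mark."""

    def __init__(self, capacity_bytes: int, high: float = 0.9, low: float = 0.7) -> None:
        self.capacity = capacity_bytes
        self.high, self.low = high, low
        self.per_stage: Dict[str, int] = defaultdict(int)
        self.paused = False

    def allocate(self, stage: str, nbytes: int) -> None:
        self.per_stage[stage] += nbytes
        self._update()

    def free(self, stage: str, nbytes: int) -> None:
        self.per_stage[stage] -= nbytes
        self._update()

    def usage(self) -> float:
        return sum(self.per_stage.values()) / self.capacity

    def _update(self) -> None:
        if not self.paused and self.usage() >= self.high:
            self.paused = True   # the control system could pause the scan here
        elif self.paused and self.usage() <= self.low:
            self.paused = False  # ...and resume it here

    def report(self) -> Dict[str, int]:
        """Snapshot of bytes allocated per stage, for the control system."""
        return dict(self.per_stage)
```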

Multiple parameter sets

Analysis

With Lima1, if parameters are modified while an acquisition is running, the reported configuration no longer matches the running acquisition:

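```python
# ct is a Lima1 control object
ct.setBinning(2, 2)
ct.prepareAcq()
ct.startAcq()

# While the acquisition is running, the user changes the binning
ct.setBinning(1, 1)

assert ct.getBinning() == (2, 2)  # Oops: fails, although the running acquisition still uses 2x2
```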

Goal

Provide a configuration data structure with multiple instances: the user configuration (before preparation), the effective hardware configuration (after preparation) and the effective software configuration (after pipeline creation).
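A sketch of the three parameter sets using immutable dataclasses, so that snapshots taken at preparation cannot be changed afterwards. It assumes a hypothetical camera that can only bin up to 2x2 in hardware, the rest being done by a software task; `AcqParams`, `Control` and `MAX_HW_BINNING` are illustrative names.

```python
from dataclasses import dataclass, replace
from typing import Optional, Tuple

MAX_HW_BINNING = 2  # hypothetical hardware limit


@dataclass(frozen=True)
class AcqParams:
    binning: Tuple[int, int] = (1, 1)
    nb_frames: int = 1


class Control:
    def __init__(self) -> None:
        self.user = AcqParams()              # edited freely by the user
        self.hw: Optional[AcqParams] = None  # effective hardware set, after prepare
        self.sw: Optional[AcqParams] = None  # effective software set, after pipeline creation

    def set_binning(self, bx: int, by: int) -> None:
        # Only the user set changes; prepared snapshots are untouched.
        self.user = replace(self.user, binning=(bx, by))

    def prepare(self) -> None:
        bx, by = self.user.binning
        hw_bin = (min(bx, MAX_HW_BINNING), min(by, MAX_HW_BINNING))
        self.hw = replace(self.user, binning=hw_bin)
        # Remaining factor done in software (assumes it divides evenly).
        self.sw = replace(self.user, binning=(bx // hw_bin[0], by // hw_bin[1]))
```

Replaying the Lima1 scenario above, changing the user binning after `prepare()` no longer alters what the running acquisition reports: `ct.hw.binning` still holds the prepared value.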

Integration of third-party processing

Analysis

With Lima1, adding a new processing task (external operation) is not documented.

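```python
from Lima import Core

# Inside a user class: register a mask task as an external operation
extOpt = ctControl.externalOperation()
self.__maskTask = extOpt.addOp(Core.MASK,
                               self.MASK_TASK_NAME,
                               self._runLevel)
```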

Goal

Provide C++ and Python APIs to extend the pipeline with custom processes. Libraries such as pyFAI (which provides GPU implementations) should be supported. Processes that have a distributed (MPI) implementation should be supported as well.
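A minimal sketch of what a Python extension point could look like, assuming a process is any callable that takes a frame and returns a frame. `Pipeline.add_process` and `run` are hypothetical names; the frame is a nested list only to keep the example dependency-free.

```python
from typing import Callable, Dict, List

Frame = List[List[int]]
Process = Callable[[Frame], Frame]


class Pipeline:
    """Hypothetical extensible pipeline: user processes are plain callables
    registered by name and executed in registration order."""

    def __init__(self) -> None:
        self._processes: Dict[str, Process] = {}

    def add_process(self, name: str, process: Process) -> None:
        if name in self._processes:
            raise ValueError(f"process {name!r} is already registered")
        self._processes[name] = process

    def run(self, frame: Frame) -> Frame:
        for process in self._processes.values():  # dicts keep insertion order
            frame = process(frame)
        return frame
```

For example, registering a threshold mask (`lambda f: [[0 if v > 100 else v for v in row] for row in f]`) followed by a flip (`lambda f: [row[::-1] for row in f]`) turns `[[1, 200], [3, 4]]` into `[[0, 1], [4, 3]]`. A wrapper around a GPU library could in principle be registered the same way, as long as it honors the frame-in/frame-out contract.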

Sparse data

Analysis

With Lima1, only dense storage is supported for images.

Goal

Support sparse storage for grayscale images.
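As an illustration, a grayscale image can be stored in a CSR-like layout (the compressed sparse row format also used, for example, by SciPy's `csr_matrix`). Choosing CSR here is an assumption, not the Lima2 format. Pixels at or below `threshold` are dropped, so with a threshold above zero the conversion is lossy by design.

```python
from typing import List, NamedTuple


class SparseImage(NamedTuple):
    """CSR-style grayscale image: the non-zero pixels of row r are
    indices[indptr[r]:indptr[r + 1]] (x positions) with matching values."""
    width: int
    height: int
    indptr: List[int]
    indices: List[int]
    values: List[int]


def to_sparse(dense: List[List[int]], threshold: int = 0) -> SparseImage:
    indptr, indices, values = [0], [], []
    for row in dense:
        for x, v in enumerate(row):
            if v > threshold:  # keep only pixels above the threshold
                indices.append(x)
                values.append(v)
        indptr.append(len(indices))
    width = len(dense[0]) if dense else 0
    return SparseImage(width, len(dense), indptr, indices, values)


def to_dense(img: SparseImage) -> List[List[int]]:
    dense = [[0] * img.width for _ in range(img.height)]
    for y in range(img.height):
        for k in range(img.indptr[y], img.indptr[y + 1]):
            dense[y][img.indices[k]] = img.values[k]
    return dense
```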

Incomplete data sets

Analysis

With Lima1, a sequence with missing frames is not supported.

Goal

Cope with incomplete (sparse) frame sequences. A frame may be missing because of:

A fault or overrun condition (detector, processing); multiple fault tolerance policies should be offered

A veto mechanism in data reduction, used to discard useless data

The cause of the missing data should be included in the metadata.
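A sketch of a sequence that tolerates gaps and records why each frame is missing. The reasons mirror the list above; the two policies (`ABORT`, `CONTINUE`) and all names are hypothetical examples of "multiple fault tolerance policies". A veto is treated as an intended gap and never triggers the fault policy.

```python
from enum import Enum
from typing import Dict, List, Optional


class MissingReason(Enum):
    DETECTOR_FAULT = "detector fault"
    OVERRUN = "overrun"
    VETO = "vetoed by data reduction"


class FaultPolicy(Enum):
    ABORT = "abort"        # stop on the first fault or overrun
    CONTINUE = "continue"  # record the gap and keep going


class FrameSequence:
    def __init__(self, nb_frames: int, policy: FaultPolicy) -> None:
        self.frames: List[Optional[bytes]] = [None] * nb_frames
        self.missing: Dict[int, MissingReason] = {}
        self.policy = policy
        self.aborted = False

    def add(self, idx: int, data: bytes) -> None:
        self.frames[idx] = data

    def mark_missing(self, idx: int, reason: MissingReason) -> None:
        self.missing[idx] = reason
        # Vetoed frames are discarded on purpose; only faults trigger the policy.
        if reason is not MissingReason.VETO and self.policy is FaultPolicy.ABORT:
            self.aborted = True

    def metadata(self) -> Dict[int, str]:
        """Missing frame indices and their causes, to be saved with the data."""
        return {idx: reason.value for idx, reason in sorted(self.missing.items())}
```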

Multiple saving / streaming tasks

Analysis

With Lima1, saving supports only images (2D data) and is performed at a fixed stage of the processing pipeline. Lima1 cannot save other data structures (1D data or tables) generated by tasks.

Goal

Multiple saving / streaming tasks can be inserted at different stages of the image processing pipeline. They can save raw or partially processed images, as well as non-2D byproducts such as statistics or reduced 1D/0D data. The saving tasks may write to the same I/O target (file) or to different ones.

The saving infrastructure should also handle metadata, which can be produced:

At different moments:

Static (detector type, instrument name)

Per acquisition (Lima configuration, start timestamp)

Per frame (detector timestamp)

Asynchronous (monitoring, temperature, processing statistics)

From different sources:

Hardware generated (frame timestamps, temperature, dead-time)

Software generated (configuration, processing statistics)
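A sketch of saving sinks attached to named stages, assuming each stage is a function and each sink receives the frame index, the stage output (image, profile or scalar) and the merged static and per-frame metadata. `SavingPipeline` and `attach` are hypothetical, and plain lists stand in for files or streams.

```python
from typing import Any, Callable, Dict, List

Stage = Callable[[Any], Any]
Sink = Callable[[int, Any, Dict[str, Any]], None]


class SavingPipeline:
    """Hypothetical pipeline where saving/streaming sinks can be attached
    after any named stage, whatever the type of data it produces."""

    def __init__(self, stages: Dict[str, Stage], static_meta: Dict[str, Any]) -> None:
        self.stages = stages
        self.static_meta = static_meta
        self.sinks: Dict[str, List[Sink]] = {name: [] for name in stages}

    def attach(self, stage: str, sink: Sink) -> None:
        self.sinks[stage].append(sink)

    def push(self, idx: int, frame: Any, frame_meta: Dict[str, Any]) -> None:
        meta = {**self.static_meta, **frame_meta}  # static + per-frame metadata
        data = frame
        for name, stage in self.stages.items():
            data = stage(data)
            for sink in self.sinks[name]:
                sink(idx, data, meta)
```

For instance, with stages `{"raw": lambda img: img, "sum": lambda img: sum(map(sum, img))}`, one sink can save raw 2D frames while another writes the 0D sum together with the detector timestamp.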

Multiple observation / testing tasks

A new kind of (sink) task can be used to monitor and retrieve data asynchronously at defined stages of the processing pipeline. Each observation point acts as a cache of its stage, with configurable history and sampling parameters.
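A sketch of an observation point, assuming "history" means the number of cached entries and "sampling" means keeping every n-th frame. The pipeline thread feeds it while a client reads it at any time, hence the lock. `ObservationPoint`, `latest` and `snapshot` are hypothetical names.

```python
import threading
from collections import deque
from typing import Any, List, Optional, Tuple


class ObservationPoint:
    """Hypothetical sink: caches every `sampling`-th frame seen at its stage,
    keeping at most `history` entries, for asynchronous retrieval."""

    def __init__(self, history: int, sampling: int = 1) -> None:
        self._cache: deque = deque(maxlen=history)  # oldest entries drop out
        self._sampling = sampling
        self._lock = threading.Lock()

    def __call__(self, idx: int, data: Any) -> None:
        # Called from the pipeline thread; kept cheap on purpose.
        if idx % self._sampling == 0:
            with self._lock:
                self._cache.append((idx, data))

    def latest(self) -> Optional[Tuple[int, Any]]:
        with self._lock:
            return self._cache[-1] if self._cache else None

    def snapshot(self) -> List[Tuple[int, Any]]:
        with self._lock:
            return list(self._cache)
```

Because the cache is bounded, an observation point that nobody reads costs a fixed amount of memory, which fits the remark above that these buffers should be optional.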

Implementation improvements

Explicit state machine

No more `if ((status == READY) && (previousStatus == RUNNING))` soup!

Acquisitions and detectors have statuses, which is an obvious sign of a state machine, if not of multiple concurrent state machines.

Using an explicit state machine means that the software is driven by a state transition table, and actions are executed when entering and leaving a state.
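A minimal table-driven state machine, as a sketch: the states, events and entry/exit actions below are hypothetical examples, not the Lima2 state set. Every allowed transition is listed in one table, and anything not in the table is rejected instead of being caught by ad hoc status checks.

```python
from enum import Enum, auto
from typing import Callable, Dict, List, Tuple


class State(Enum):
    IDLE = auto()
    PREPARED = auto()
    RUNNING = auto()


class Event(Enum):
    PREPARE = auto()
    START = auto()
    STOP = auto()


# The transition table: (current state, event) -> next state
TRANSITIONS: Dict[Tuple[State, Event], State] = {
    (State.IDLE, Event.PREPARE): State.PREPARED,
    (State.PREPARED, Event.START): State.RUNNING,
    (State.RUNNING, Event.STOP): State.IDLE,
}


class Acquisition:
    def __init__(self) -> None:
        self.state = State.IDLE
        self.log: List[str] = []
        # Actions run when entering / leaving a state
        self.on_enter: Dict[State, Callable[[], None]] = {
            State.RUNNING: lambda: self.log.append("start acquisition thread"),
        }
        self.on_exit: Dict[State, Callable[[], None]] = {
            State.RUNNING: lambda: self.log.append("stop acquisition thread"),
        }

    def handle(self, event: Event) -> None:
        key = (self.state, event)
        if key not in TRANSITIONS:
            raise RuntimeError(f"{event.name} not allowed in state {self.state.name}")
        self.on_exit.get(self.state, lambda: None)()
        self.state = TRANSITIONS[key]
        self.on_enter.get(self.state, lambda: None)()
```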

Prefer events to callbacks

Callbacks are executed in the context of the thread that invokes them, potentially the acquisition thread. A better approach is to provide a synchronization primitive that applications use to handle events in their own threads.
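A sketch of the event-based approach, using a thread-safe queue as the synchronization primitive (an assumption; other primitives would work). The acquisition thread only posts `FrameReady` events and never runs user code; the application consumes them in its own thread. All names are hypothetical.

```python
import queue
import threading
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class FrameReady:
    frame_idx: int


def acquisition_thread(events: queue.Queue, nb_frames: int) -> None:
    # The library only posts events; no user code runs on this thread.
    for idx in range(nb_frames):
        events.put(FrameReady(idx))
    events.put(None)  # end of acquisition


def application_loop(events: queue.Queue) -> List[Tuple[int, str]]:
    """Handle events in the application's own thread."""
    handled = []
    while True:
        event = events.get(timeout=5)  # raises queue.Empty if nothing arrives
        if event is None:
            break
        handled.append((event.frame_idx, threading.current_thread().name))
    return handled
```

A slow handler in `application_loop` now only delays the application, not the acquisition thread that produces the events.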

Externalize the acquisition thread

In Lima1, the acquisition thread is part of the plugin implementation; in other words, the plugin is responsible for feeding the ring buffer. It would be more flexible to have common acquisition thread(s) and leave only the specifics of “getting an image” to the plugin.
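A sketch of the split, assuming the plugin contract is reduced to a single `get_image(frame_idx)` method returning `None` on failure. `CameraPlugin`, `AcquisitionLoop` and `FakeCamera` are hypothetical; a `deque` with `maxlen` stands in for the ring buffer and silently overwrites the oldest frames when full (an overrun).

```python
import threading
from collections import deque
from typing import Optional, Protocol


class CameraPlugin(Protocol):
    """The only thing a plugin implements in this sketch."""
    def get_image(self, frame_idx: int) -> Optional[bytes]: ...


class AcquisitionLoop:
    """Common acquisition thread: polls the plugin and feeds the ring buffer,
    so plugins no longer manage threads themselves."""

    def __init__(self, plugin: CameraPlugin, ring_size: int) -> None:
        self.plugin = plugin
        self.ring: deque = deque(maxlen=ring_size)
        self._thread: Optional[threading.Thread] = None

    def start(self, nb_frames: int) -> None:
        self._thread = threading.Thread(target=self._run, args=(nb_frames,))
        self._thread.start()

    def _run(self, nb_frames: int) -> None:
        for idx in range(nb_frames):
            image = self.plugin.get_image(idx)
            if image is None:  # plugin timeout or failure
                break
            self.ring.append((idx, image))

    def wait(self) -> None:
        if self._thread is not None:
            self._thread.join()


class FakeCamera:
    """Stand-in plugin returning a one-byte image per frame."""
    def get_image(self, frame_idx: int) -> Optional[bytes]:
        return bytes([frame_idx])
```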

Hide vendor SDKs from users

Use the Pointer to implementation (pimpl) idiom, so that vendor SDK headers and types do not leak into the public interface. Check out the documentation for a thorough explanation of this pattern.

Simplify usage

```cpp
// Lima1
Camera cam(parameters);
cam.setBitDepth(FIVE_BIT_AND_A_HALF);
HwInterface hw(cam);
Control ct(hw);
```

is now

```cpp
// Lima2 (the class name `camera` is assumed here)
simulator::camera cam(parameters);
cam.set_bit_depth(FIVE_BIT_AND_A_HALF);
```

Note that you still have access to camera-specific functionality.

Simplify Python binding

With introspection, most of the binding can be generated programmatically.

Boilerplate in the plugin code

Reduce repetitive code using generative programming techniques.

Use established third-party libraries

Benefit from libraries developed, used and maintained by large communities. Examples are:

Boost

Adobe Stlab.Concurrency

MPI