Design¶

The following sections give a high-level overview of the Lima2 design.

Library Layers¶

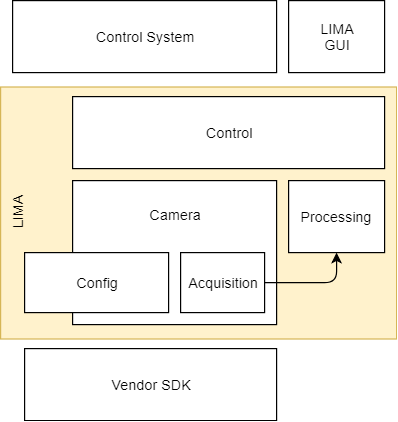

Lima2 is composed of 3 main layers that are further documented in their own sections:

the Camera layer is an abstraction layer that implements the detector-specific code

the Processing layer is a task system and a library of image processing algorithms used to build the processing pipeline

the Control layer, built on top of the previous two, is in charge of preparing and running the acquisition and of building and executing the processing pipeline.
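The relationship between the three layers can be pictured with a minimal Python sketch. Every name here (`SimulatedCamera`, `Pipeline`, `Control`, the two tasks) is hypothetical and chosen for illustration only; none of it is the Lima2 API.

```python
from typing import Callable, Iterator, List

Frame = List[int]  # hypothetical stand-in for an image buffer


class SimulatedCamera:
    """Camera layer: hides detector-specific code behind a common interface."""

    def __init__(self, nb_frames: int, frame_size: int) -> None:
        self.nb_frames = nb_frames
        self.frame_size = frame_size

    def acquire(self) -> Iterator[Frame]:
        # Yield synthetic frames; a real plugin would talk to the detector SDK.
        for i in range(self.nb_frames):
            yield [i] * self.frame_size


class Pipeline:
    """Processing layer: an ordered chain of processing tasks."""

    def __init__(self, tasks: List[Callable[[Frame], Frame]]) -> None:
        self.tasks = tasks

    def process(self, frame: Frame) -> Frame:
        for task in self.tasks:
            frame = task(frame)
        return frame


class Control:
    """Control layer: built on the two others, runs acquisition and pipeline."""

    def __init__(self, camera: SimulatedCamera, pipeline: Pipeline) -> None:
        self.camera = camera
        self.pipeline = pipeline

    def run(self) -> List[Frame]:
        return [self.pipeline.process(f) for f in self.camera.acquire()]


def add_offset(frame: Frame) -> Frame:
    return [pixel + 1 for pixel in frame]


def double(frame: Frame) -> Frame:
    return [pixel * 2 for pixel in frame]


control = Control(SimulatedCamera(nb_frames=3, frame_size=2),
                  Pipeline([add_offset, double]))
results = control.run()
```

In this sketch the control object is the only one that knows about both the camera and the pipeline, which mirrors the layering described above.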

Distributed computing¶

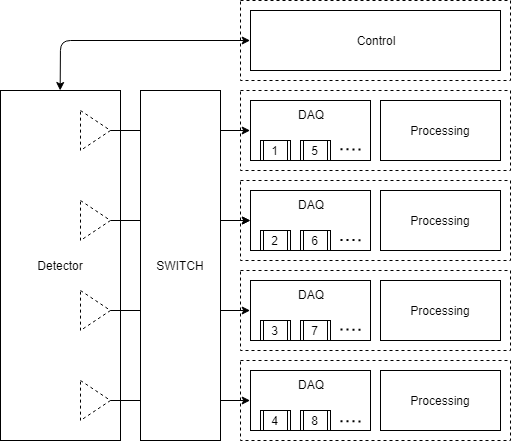

High-performance detectors generate more data than a single acquisition backend can handle. The solution is to run multiple coordinated acquisition backends, each handling only part of the data flow.

Topologies¶

Multiple topologies and frame dispatching strategies must be considered for different use cases.

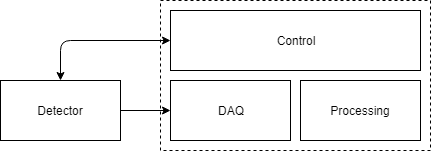

Single computer¶

Use case: Legacy cameras.

Distributed Processing¶

Use case: PCO Edge / Dectris Eiger2.

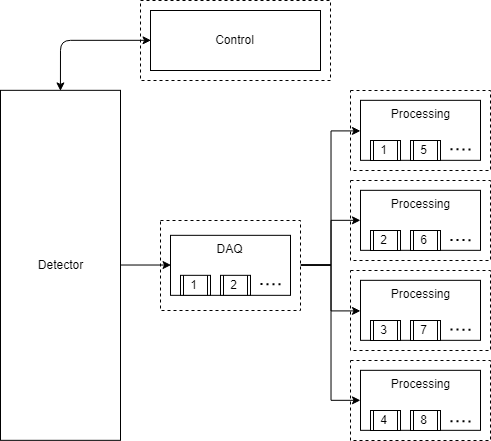

Distributed Acquisition with partial frame dispatching¶

Use case: PSI Eiger / Jungfrau.

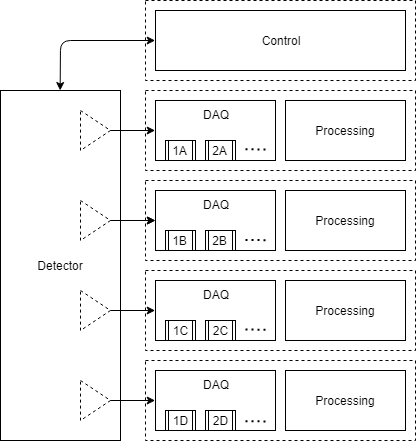

Distributed Acquisition with full frame dispatching¶

Use case: ESRF RASHPA / Smartpix.
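The difference between the two dispatching strategies can be sketched as follows. This assumes that "full frame" means each backend receives whole frames and "partial frame" means each backend receives a portion of every frame. The round-robin order and the split into row bands are illustrative assumptions, not the strategies Lima2 actually implements, and the function names are hypothetical.

```python
from typing import Dict, List

Frame = List[List[int]]  # a frame as a list of pixel rows


def dispatch_full_frames(nb_frames: int, nb_backends: int) -> Dict[int, List[int]]:
    """Round-robin: backend b receives whole frames b, b + n, b + 2n, ..."""
    assignment: Dict[int, List[int]] = {b: [] for b in range(nb_backends)}
    for idx in range(nb_frames):
        assignment[idx % nb_backends].append(idx)
    return assignment


def dispatch_partial_frame(frame: Frame, nb_backends: int) -> List[Frame]:
    """Split one frame into contiguous row bands, one band per backend."""
    base, extra = divmod(len(frame), nb_backends)
    bands: List[Frame] = []
    start = 0
    for b in range(nb_backends):
        # The first `extra` backends take one additional row.
        stop = start + base + (1 if b < extra else 0)
        bands.append(frame[start:stop])
        start = stop
    return bands


full = dispatch_full_frames(nb_frames=5, nb_backends=2)
frame = [[r * 10 + c for c in range(3)] for r in range(5)]
bands = dispatch_partial_frame(frame, nb_backends=2)
```

With full frame dispatching each backend can process its frames independently, whereas with partial frame dispatching the bands of a given frame have to be brought together again if a complete image is needed.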

MPI Introduction¶

Message Passing Interface (MPI) is a standardized message-passing library interface specification.

There are many tutorials on MPI, such as this excellent Introduction to distributed computing with MPI; only the basic concepts are recapped in the following paragraphs.

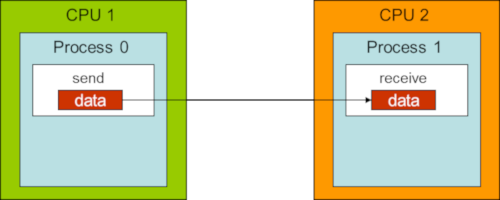

The message passing programming model¶

An MPI application is a group of autonomous processes, each executing its own code written in a conventional language (C++, Python). All the program variables are private and reside in the local memory of each process. Each process can execute different parts of a program. A variable is exchanged between two or more processes through explicit calls to MPI subroutines.

Multiple Data Single Program¶

In this configuration, every process in the cluster runs the same program. The program can have multiple facets, or roles, which are assigned according to the process rank.
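Role assignment by rank can be sketched in a few lines. The role names (`control`, `receiver`) and the rule that rank 0 coordinates are assumptions made for illustration. The ranks are enumerated in a plain loop so that the sketch runs without an MPI installation.

```python
def role_for_rank(rank: int, size: int) -> str:
    """Pick a role from the process rank (hypothetical role names)."""
    if not 0 <= rank < size:
        raise ValueError(f"rank {rank} is outside communicator of size {size}")
    # Assumption: rank 0 coordinates, all other ranks receive data.
    return "control" if rank == 0 else "receiver"


# In a real MPI run every process executes this same program and queries its
# own rank from the communicator; here the four ranks are simply enumerated.
roles = [role_for_rank(rank, size=4) for rank in range(4)]
```

Because the same program runs everywhere, the branch taken on the rank is the only thing that distinguishes one process from another.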

Multiple Data Multiple Program¶

MPI can also run tasks that are different programs, each executing a different part of the workflow. For example, one task can be a C++ program and another a Python program.

Communications¶

When a program is run with MPI, all the processes are grouped in what is called a communicator. Every communication is tied to a communicator, which determines the processes it can reach.

Point to Point¶

TODO.

MPI defines four communication modes:

Synchronous

Buffered

Ready

Standard

Each mode exists in both blocking and nonblocking variants.
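For reference, the four modes map onto the send routines of the MPI standard as shown in the lookup table below. The routine names come from the MPI specification; the `send_call` helper is a hypothetical convenience added for illustration. The receive side uses `MPI_Recv` or `MPI_Irecv` whatever the send mode.

```python
# Send mode -> (blocking routine, nonblocking routine), as named in the MPI standard.
SEND_CALLS = {
    "standard": ("MPI_Send", "MPI_Isend"),
    "synchronous": ("MPI_Ssend", "MPI_Issend"),
    "buffered": ("MPI_Bsend", "MPI_Ibsend"),
    "ready": ("MPI_Rsend", "MPI_Irsend"),
}


def send_call(mode: str, blocking: bool = True) -> str:
    """Return the name of the MPI send routine for a mode (hypothetical helper)."""
    blocking_name, nonblocking_name = SEND_CALLS[mode.lower()]
    return blocking_name if blocking else nonblocking_name


example_blocking = send_call("Synchronous")
example_nonblocking = send_call("ready", blocking=False)
```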

Collective¶

TODO.

MPI Streams¶

MPIStream is an extension of MPI that supports data streams, that is, sequences of data flowing between source and destination processes. Streaming is widely used in signal, image and video processing because it pipelines efficiently and reduces memory demand.
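The memory argument can be illustrated with a plain-Python analogy based on generators. This is not the MPIStream API, and it runs in a single process. It only shows that when items flow one at a time from a source through a processing stage to a sink, the whole data set never has to be held in memory at once. All function names are hypothetical.

```python
from typing import Iterable, Iterator


def source(nb_items: int) -> Iterator[int]:
    """Produce items one at a time instead of building a full list."""
    for i in range(nb_items):
        yield i


def square_stage(stream: Iterable[int]) -> Iterator[int]:
    """Intermediate stage: transforms each item as it passes through."""
    for item in stream:
        yield item * item


def sum_sink(stream: Iterable[int]) -> int:
    """Destination: consumes the stream, keeping only a running total."""
    total = 0
    for item in stream:
        total += item
    return total


# Each item flows through the whole chain before the next one is produced.
total = sum_sink(square_stage(source(5)))
```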

Resources¶

Papers¶

MPI Streams for HPC Applications

Talks¶

Talks that influenced the design of Lima2.

Image processing¶

Boost Generic Image Library (GIL)

Data structures¶

CppCon 2014: Herb Sutter “Lock-Free Programming (or, Juggling Razor Blades), Part I”

CppCon 2014: Herb Sutter “Lock-Free Programming (or, Juggling Razor Blades), Part II”

General techniques¶

NDC 2017: Sean Parent “Better Code: Concurrency”

C++Now 2018: Louis Dionne “Runtime Polymorphism: Back to the Basics”

Meta programming¶

C++Now 2017: Louis Dionne “Fun with Boost.Hana”

Types¶

CppCon 2016: Ben Deane “Using Types Effectively”

Zero overhead utilities for more type safety